Intervention

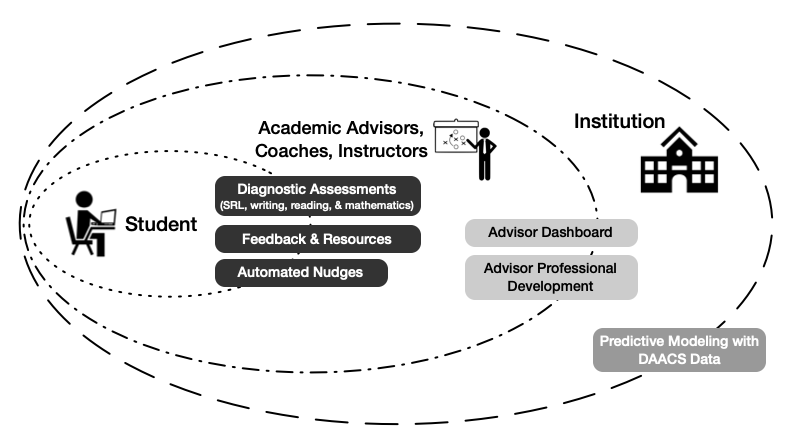

DAACS is a suite of open source, online assessments and supports (both technological and social) designed to optimize student learning (see https://daacs.net/). DAACS has five main components (Figure 1): (1) diagnostic assessments that permit valid and reliable inferences of students’ readiness for college in terms of self-regulated learning, reading, writing, and mathematics; (2) performance feedback with recommended strategies and links to open educational resources; (3) nudges that prompt students to engage in self-directed preparation for college-level academic work; (4) trained academic advisors who help students build on strengths while addressing areas of weakness identified by the assessments; and (5) predictive models that identify students at risk, as well as specific risk factors. Detailed descriptions of each component are provided after the following summary of initial research results.

Figure 1. DAACS Framework and Components

The associations between success in college and DAACS usage by students and advisors suggest that DAACS served its intended purposes for the students who were motivated to use it, while other students needed encouragement from the system and advisors (Bryer et al., 2019). In response, we developed several enhancements, including the SRL Lab (https://srl.daacs.net); a variety of nudges that prompt students to complete the assessments (Franklin et al., 2019), use the resources, and communicate with their advisors; and enhanced advisor training (Slemp et al., 2019) with an advising dashboard that succinctly summarizes students’ DAACS results and recommendations. DAACS is now ready for a rigorous test of its efficacy in promoting student success in terms of credit completion and retention, as well as its predictive power and cost effectiveness. In the remainder of this section we present detailed descriptions of the DAACS system, as well as evidence of validity, reliability, and efficacy, as appropriate (Table 1).

Many college students lack sufficient awareness of their learning strengths and weaknesses and the academic demands of college studies (Zimmerman et al., 2011). To enhance students’ knowledge of their academic and non-academic skills, DAACS includes diagnostic assessments of disciplinary content (reading, math, and writing) as well as SRL skills (metacognition, strategy use, motivation). Unlike placement exams, which provide only a pass/fail score and are used to place students into remedial courses, these diagnostic assessments provide students with information about their strengths and weaknesses prior to beginning college so they can build up weak areas while taking credit-bearing courses.

SRL Survey. The SRL survey consists of 62 Likert-type items adapted from established SRL measures (Cleary, 2006; Driscoll, 2007; Dugan & Andrade, 2011; Dweck, 2006; Schraw & Dennison, 1994). The items cover three domains: metacognition, motivation, and learning strategies. The SRL assessment has excellent psychometric qualities, suggesting inferences drawn from the survey scores are valid and reliable (Lui et al., 2018).

Writing assessment. The writing assessment asks students to summarize their SRL survey results, identify specific strategies for improving their SRL, and commit to using them. Thus, the writing assessment not only assesses writing skills, but also engages students in reflecting on and planning to develop their skills in SRL. An open source, automated essay scoring program was trained to reliably score the writing assessments in terms of nine criteria related to effective college-level writing (Yagelski, 2015) and provide students with feedback within one minute (Akhmedjanova et al., 2019; Andrade et al., 2018).

Mathematics and reading assessments. The mathematics and reading assessments are computer-adaptive tests with 18 to 24 multiple choice items adapted from state-mandated high school English language arts and mathematics exams, which are useful for identifying college readiness (Han, 2003; Jirka & Hambleton, 2005; Massachusetts Department of Elementary and Secondary Education, 2017; New York State Education Department, 2014a, 2014b). The DAACS reading and mathematics assessments have acceptable psychometric properties, including convergent and discriminant validity evidence, and acceptable internal consistency estimates.

Table 1. Four DAACS Assessment Domains and Sub-Domains

| Domain | Sub-domains | Reliability |
|---|---|---|
| Self-regulated learning | Metacognition, motivation (anxiety, goal orientation, self-efficacy), learning strategies (help seeking, managing time, managing environment, strategies for understanding), mindset | α = .79–.91 |
| Writing | Content, organization, paragraphs, sentences, conventions | Average LightSide-human IRR = 66.3% |
| Mathematics | Word problems, geometry, variables and equations, numbers and calculations, lines and functions | α = .69 |
| Reading | Ideas, inference, language, purpose, structure | α = .67 |
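For readers unfamiliar with the reliability column, the α values in Table 1 are internal consistency estimates. Assuming they are Cronbach's alpha (the source reports only "α"), the following minimal Python sketch shows how such a coefficient is computed from an item-response matrix. The function name and the sample responses are hypothetical and are not DAACS data or DAACS code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Transpose rows (respondents) into columns (items).
    items = list(zip(*responses))
    item_variance_sum = sum(variance(column) for column in items)
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical Likert-type responses: 5 respondents x 4 items.
sample = [
    [4, 5, 4, 4],
    [2, 3, 2, 3],
    [5, 5, 4, 5],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
]
print(round(cronbach_alpha(sample), 2))
```

Values closer to 1 indicate that the items in a scale vary together, which is why the coefficient is commonly read as evidence of internal consistency.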

Three components of DAACS were designed to promote self-directed learning: (1) the immediate feedback students receive upon completing the diagnostic assessments, (2) links to Open Educational Resources (OERs) related to individual students’ results, and (3) nudges, or periodic encouragement to take advantage of the feedback, resources, and academic advisors. Details and sample feedback are provided in Table 2 and Appendix D, respectively.

Immediate feedback. The feedback and resources provided to students by DAACS are an especially powerful and unique aspect of its design. Consistent with findings from research on formative feedback (e.g., Hattie & Timperley, 2007; Meer & Dawson, 2018; Shute, 2008; Wiliam & Thompson, 2007), DAACS feedback can increase student awareness of discrepancies between their current and desired skill levels, and provide suggestions about how to improve. As a result, students have a greater likelihood of enhancing performance and succeeding in school. Furthermore, feedback that guides adaptation is a hallmark of SRL theories (Efklides, 2011; Winne & Hadwin, 1998; Zimmerman, 2000), most of which depict SRL as a goal-directed, cyclical process whereby individuals set goals, plan, enact learning strategies, deploy monitoring techniques, and then evaluate and adapt (Boekaerts et al., 2000). DAACS represents a structured assessment-to-feedback system designed to enhance regulatory skills.

Open Educational Resources. Two new OERs were created with the support of the FIPSE FITW grant: the SRL Lab (srl.daacs.net) and the Reading Comprehension Lab (owl.excelsior.edu/orc). A library of pre-existing math-related OERs was also curated. Institution-specific resources, such as the Online Writing Lab, are also linked to feedback.

Table 2. Four DAACS Assessment Domains, Sample Feedback and Resources

| Domain | Sample Feedback | Sample Resources |
|---|---|---|
| Self-regulated learning | Mindset: “The SRL assessment results suggest that you have a fixed mindset, meaning you tend to believe your intelligence cannot be changed over time. Although you might have a fixed mindset right now, you can change it to a growth mindset. That is, you can learn to think and act like your intelligence can be improved with effort.” | Self-Regulated Learning Lab: https://srl.daacs.net/ |
| Writing | Transitions: “Your writing was scored at the developing level for transitions between paragraphs, which were missing or ineffective. Paragraphs tended to abruptly shift from one idea to the next.” | Excelsior College’s Online Writing Lab: https://owl.excelsior.edu |
| Mathematics | Statistics: “Your results suggest that you have emerging skills for reasoning with data. To further develop your skills at summarizing data with statistics, graphs, and tables, these resources might be a good starting point: …” | Math is Fun: https://www.mathsisfun.com/ |
| Reading | Inferences: “Your results suggest an area of improvement for you is reading closely to determine implied meaning. A skill you may want to improve is the ability to draw logical inferences from what texts explicitly say to determine the implied meaning. An inference is…” | Reading Comprehension Lab: https://owl.excelsior.edu/orc/ |

There is a modest but promising body of research on the effectiveness of OERs for improving student outcomes and reducing higher education costs (Hilton, 2016; Hilton et al., 2014). In a research review, Hilton (2016) found that students who use OERs generally have learning outcomes equal to or better than those of students using traditional learning methods. These results have been demonstrated across a variety of subjects and outcome variables. The review also indicated that student and faculty perceptions of OERs are very positive, with most preferring OERs over traditional learning materials. Although these results are promising, some studies have found null effects (Grimaldi et al., 2019). Moreover, even if OERs are at least as effective as traditional, expensive learning materials such as textbooks, they can only have an effect if students actually access them. The nudges feature of DAACS was designed to increase student engagement with OERs.

A major finding from our FIPSE study indicated that DAACS is only helpful to students who choose to not only take the assessments, but also access the feedback and resources (see Figures 2 and 3). In response to these findings, we developed and tested nudges to encourage more students to take advantage of the wealth of information and resources available to them via the DAACS. To nudge is “to alert, remind, or mildly warn another” (Thaler & Sunstein, 2008, p. 4). The nudges were informed by studies that demonstrated their effectiveness in influencing behavior. For example, the U.K. Nudge Unit sent letters to individuals who had not paid their taxes, the most effective of which read, “Nine out of ten people in the U.K. pay their taxes on time. You are currently in the very small minority of people who have not paid us yet.” Within 23 days, there was an increase of 15% in the number of people paying their taxes (Halpern, 2015). Similar nudges based on social norms have been shown to be effective in improving organ donor registrations (Thaler & Sunstein, 2008), decreasing cigarette smoking on college campuses (Perkins, 2003), and increasing elementary school students’ use of deliberate practice (Eskreis-Winkler et al., 2016).

Reminders are a type of nudge that prompts students to turn their attention to a particular problem or task, gives them easy access to information, and/or reminds them of the benefits of completing a task (Damgaard & Nielsen, 2018). These types of nudges have had a positive effect on several educational outcomes, including college enrollment for low income and first-generation students (Castleman & Page, 2017). Informational nudges aim to improve student outcomes by providing information about their behavior and ability, or by encouraging students to overcome barriers (Damgaard & Nielsen, 2018). Informational nudges aimed at improving students’ grit (Alan, Boneva, & Ertac, 2016), planning (De Paola & Scoppa, 2015; Yeomans & Reich, 2017), goal setting (Alan et al., 2016; De Paola & Scoppa, 2015), and time management (Bettinger & Baker, 2014; De Paola & Scoppa, 2015) have had positive effects on academic outcomes. Two of our nudges reflect social norms: They inform students of either the percentage of students from their school who have completed DAACS or the higher success rate of students who use DAACS. We also developed three reminder and informational nudges, including one that reminds students to re-read the essay they wrote for the writing assessment in order to recall the SRL strategies they committed to using; one that has a link to feedback on a domain on which they scored particularly low or high; and one encouraging students to complete the DAACS. Nudges are sent via email and include convenient links to the DAACS. The nudges resulted in a significant increase in students’ use of the DAACS (χ² = 7.7, p < 0.05) and the feedback it provides (χ² = 14.2, p < 0.01) (Franklin et al., 2019).
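The test statistics reported for the nudges appear to be chi-square tests of association between nudge condition and student behavior. The source does not report the underlying counts or degrees of freedom, so the following Python sketch only illustrates how a Pearson chi-square statistic is computed from a contingency table in general. The function name and the counts are hypothetical and are not taken from Franklin et al. (2019).

```python
def pearson_chi_square(table):
    """Return (statistic, degrees of freedom) for an r x c table of counts.

    Each cell contributes (observed - expected)^2 / expected, where the
    expected count assumes rows and columns are independent.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, df


# Hypothetical counts: rows are nudge conditions,
# columns are (used DAACS, did not use DAACS).
counts = [
    [50, 50],  # nudged
    [30, 70],  # not nudged
]
stat, df = pearson_chi_square(counts)
print(f"chi-square = {stat:.2f}, df = {df}")
```

The statistic is then compared against the chi-square distribution with the given degrees of freedom to obtain a p-value.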

Students in postsecondary education are typically assigned an academic advisor who assists in course planning and problem solving (Bailey et al., 2016; Grubb, 2001). DAACS was designed to be an advising tool that enables advisors to access information about students’ academic strengths and weaknesses, use the information to focus advising conversations, and help students set actionable goals. A few studies that meet the What Works Clearinghouse (WWC) recommendations without reservations reported that college students who participated in enhanced advisement were likely to accumulate more credits than students in control groups (Bailey et al., 2016; Cousert, 1999; Scrivener & Weiss, 2013; Visher et al., 2010). To enhance advising, DAACS has a new advisor dashboard and comprehensive professional development.

Advisor dashboard. Business-as-usual (BAU) advising at our partner institutions requires students to meet with an advisor at least once per semester. Students in the treatment condition will meet with advisors who have easy access to the online dashboard, which facilitates advisors’ use of DAACS results (Appendix D, pp. 9-10). The initial page presents a student’s scores on each assessment, as well as top strengths and weaknesses. Advisors can also easily access detailed information related to student outcomes, such as specific item responses, and can recommend strategies or links to appropriate resources.

Advisor professional development (PD). In-person and online trainings will enhance advisors’ knowledge of DAACS and the ways in which it can be used to promote student success (Appendix C-2). An emphasis is placed on the application of SRL strategies to academic contexts. The SRL workshop to be provided during the proposed study is an updated version of the workshop administered as part of the FIPSE grant. Evaluations of 36 advisors receiving the three-hour SRL workshop revealed statistically significant increases in their knowledge of SRL and self-efficacy for helping students (Cleary et al., 2019). The new version of the PD will involve advisors in considering case studies based on actual students, and role playing DAACS-based advising sessions. Advisors will also be invited to engage in action research related to inquiry questions they develop, supported by the DAACS research team. Regular contact between the team and advisors and their supervisors will allow for trouble-shooting, as well as identifying questions, concerns, and suggestions for augmentations to the DAACS.

As institutions serve more students with fewer resources, being able to identify academically at-risk students early in their programs and provide robust academic and motivational supports is critical. Beta-DAACS data increased the accuracy of models predicting student success in their first term by as much as 6.9% over baseline models (Bryer et al., 2020). This is valuable to institutions interested in prioritizing outreach to students and/or monitoring student progress upon beginning coursework.

Our theory of change is based on bioecological systems theory, which describes an environment as a “set of nested structures, each inside the next like a set of Russian dolls” (Bronfenbrenner, 1979, p. 3). Five interrelated layers surround a focal individual – microsystem, mesosystem, exosystem, macrosystem, and chronosystem – and are arranged from systems having the most to the least direct impact on the individual’s development. The influences lie in the setting, individuals, and social interactions within and between these systems (e.g., Neal & Neal, 2013). As shown in Figure 1, our focal individual is the student, including the student’s cognitive capacities and socioemotional and motivational tendencies. Academic advisors and institutions surround the students as part of the educational setting. DAACS components are designed to affect each level in the system and to strengthen both the interactions between systems and the influences that these interactions have on students’ educational experience.

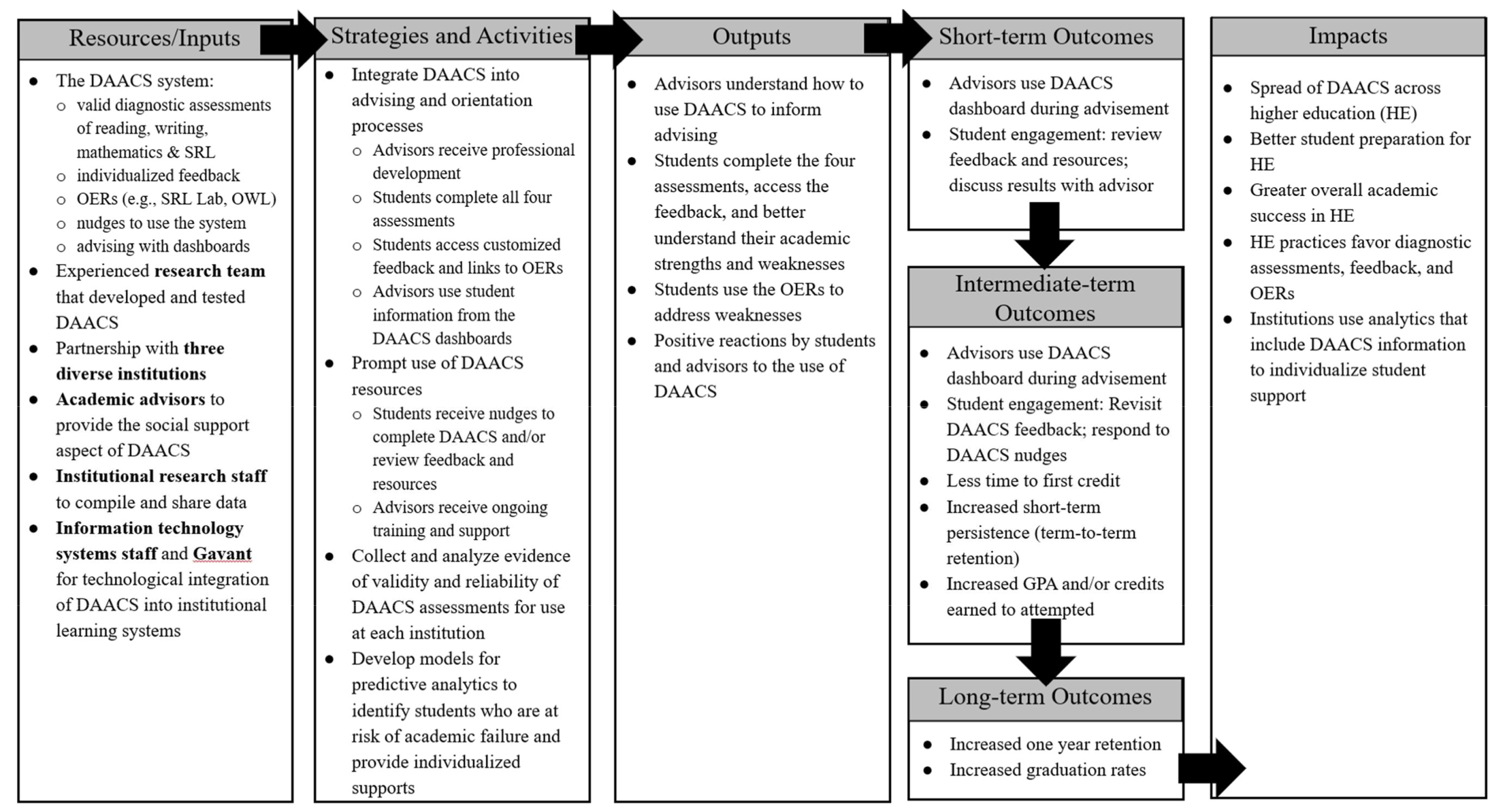

As explained above, DAACS is a research-based intervention that integrates SRL, diagnostic assessments and feedback, social supports, open educational resources, nudges, and predictive modeling in the service of retention and success in higher education. Our logic model (Appendix C-3) summarizes the design of our proposed intervention and how it is expected to lead to short-term, intermediate, and long-term outcomes, at the student level and systemically. We posit that students, as the focal individuals, benefit from information about their academic strengths and weaknesses (e.g., Hattie & Timperley, 2007; Shute, 2008; Wiliam & Thompson, 2007), feedback about how to address deficits with links to useful resources (Hilton, 2016; Hilton et al., 2014), and guidance from advisors who understand how to use students’ information and feedback during advising (Grubb, 2001). According to SRL theories (Efklides, 2011; Winne & Hadwin, 1998; Zimmerman, 2000), the information provided to students by DAACS will also promote the development of self-regulated learning.

Features designed to leverage the feedback and resources made available by DAACS include nudges to students and advisors, and dashboards and training to guide DAACS-informed advisement. These features are intended to strengthen interactions between advisors and students. As a result, students will develop the skills necessary to persist in school, waste less time in remedial courses, earn more credits, and have higher GPAs. In addition, participating institutions will use DAACS data to more accurately identify students in need of extra support and to individualize the support they receive. As DAACS becomes more widely used, higher education practices will shift away from remediation and toward a more informative and supportive approach based on diagnostic assessment, feedback, and open educational resources.

Figure 2. DAACS Logic Model
